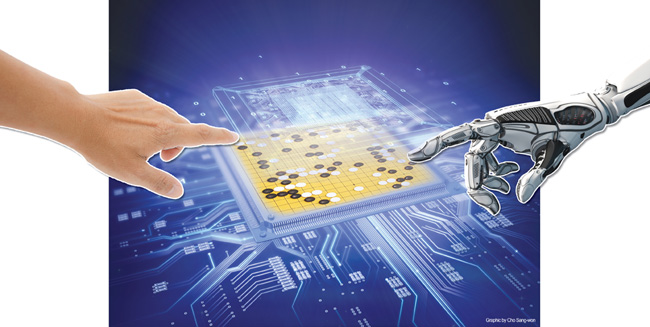

Human vs. Artificial Intelligence

World Go champion will face Google supercomputer on March 9

By Lee Han-soo, Park Si-soo

D-day is upon us.

For one week from March 9, top-ranked Go (baduk) player Lee Se-dol of Korea will engage in a merciless one-on-one fight with highly advanced artificial intelligence (AI) program AlphaGo in a five-game showdown in Seoul.

Whoever wins, the match’s outcome will lay the groundwork for an answer to a long-held question of whether AI will be able to prevail over the human brain.

A crushing victory for Lee would bring a deep sigh of relief to fellow humans who feel increasingly uneasy about AI’s advance into fields once dominated by people. But what if AlphaGo wins? They might picture a dystopian future in which humans are left helpless and treated like marionettes tightly controlled by a robot brain, as portrayed in dismal sci-fi flicks such as “I, Robot.”

AlphaGo’s developer, Google DeepMind, a British tech firm Google acquired in 2014, says the computer brain will make its way into segments that require creative thinking, indicating the already simmering tensions between humans and AI will intensify.

Many experts cite Go, the ancient board game known as baduk in Korea and weiqi in China, as one of the best ways to test AI’s creative thinking because of its intuitive nature and complexity.

AI surpassed humans long ago in other games, including chess, but Go remains an arena where humans still hold the edge. Last October, AlphaGo crushed European Go champion Fan Hui, but he falls behind world champion Lee in many ways.

At least for now, both sides seem confident they will prevail.

“To tell you the truth, the match between Fan Hui and AlphaGo did not really meet the standards to have a match with me,” Lee said during a pre-match press briefing at the Korea Baduk Association in Seoul on Feb. 22. “However, I’ve heard that it is constantly being updated as we speak, so I believe it will be more challenging than October. Still, it doesn’t change the fact that I have the upper hand. Truthfully, it is about me having a perfect game or not.”

According to Google DeepMind, the AI program has a self-learning ability.

“This really is our Deep Blue moment,” Demis Hassabis, Google DeepMind’s president of engineering, said in early February at the American Association for the Advancement of Science’s annual meeting in Washington.

Hassabis said most Go players are giving Lee the edge over AlphaGo. “They give us a less than 5 percent chance of winning … but what they don’t realize is how much our system has improved,” he said. “It’s improving while I’m talking with you.”

For Hassabis, the AlphaGo project is much more than beating one of the world’s best Go players. He said the principles that are put to work in the program can be applied to other AI challenges, ranging from programming self-driving cars to creating more humanlike virtual assistants and improving the diagnoses for diseases.

“We think AI is solving a meta-problem for all these problems,” he said.

How does AlphaGo actually work?

Many are wondering what has made AlphaGo so successful where other AIs have failed.

“The traditional search tree over all possibilities does not have a chance in Go,” said Hassabis.

This is why the Google DeepMind team developed two neural networks to create a new system for AlphaGo.

Think of Go as a vast tree of possibilities, stretching almost to infinity. AlphaGo uses the two neural networks to narrow the search. The policy network judges which moves are most likely to be played and are therefore worth considering, which cuts down the width of the search tree. The value network estimates which side, black or white, is better placed to win from a given position, which reduces the depth the search has to reach.
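The narrowing described above can be put in rough numbers. The sketch below is illustrative only: the branching factor of 250 and game length of 150 are commonly quoted approximations for Go, while `TOP_K`, `CUT_DEPTH` and the helper names are hypothetical values chosen for the example, not figures from DeepMind.

```python
def leaf_count(branching: int, depth: int) -> int:
    """Number of leaf positions in a uniform game tree."""
    return branching ** depth


def top_k_moves(priors: dict, k: int) -> list:
    """Keep only the k moves a (hypothetical) policy network scores highest."""
    return sorted(priors, key=priors.get, reverse=True)[:k]


# Rough, commonly quoted approximations for Go (assumptions).
FULL_BRANCHING = 250   # legal moves per position
FULL_DEPTH = 150       # moves per game

# Hypothetical pruning settings for illustration.
TOP_K = 5              # moves kept per position after the policy prior
CUT_DEPTH = 10         # plies searched before a value estimate replaces further search

full = leaf_count(FULL_BRANCHING, FULL_DEPTH)
pruned = leaf_count(TOP_K, CUT_DEPTH)

print(f"full tree:   about 10^{len(str(full)) - 1} leaf positions")
print(f"pruned tree: {pruned:,} leaf positions")
print(top_k_moves({"a": 0.1, "b": 0.6, "c": 0.3}, 2))
```

Cutting width shrinks the base of the exponent and cutting depth shrinks the exponent itself, which is why the two networks together make the search tractable.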

While the Deep Blue supercomputer considered about 2 million moves before making a move, AlphaGo considers only about 100,000. Of course, this is still far more than a human expert, who can look at about 1,000 moves.

DeepMind trained the two neural networks individually.

It first trained the policy network to mimic the moves of professional Go players, then improved it by letting the computer play against itself about 13 million times. Finally, DeepMind took one position, with either black or white to move, from each of those games and built a new data set.

DeepMind then used this data set to train the value network to predict who would win from each position in a game. From there, the team combined the two networks using Monte Carlo tree search to produce the final AlphaGo.
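How a tree search can combine a move prior with a position evaluator is easier to see on a toy game. The following is a minimal sketch, not DeepMind's implementation: the game (take one to three stones from a pile, and whoever takes the last stone wins), the function names and the exploration constant are all assumptions, and `policy_prior` and `value_estimate` are hand-written stand-ins for trained networks.

```python
import math

MOVES = (1, 2, 3)  # toy game: remove 1-3 stones; taking the last stone wins


def legal_moves(stones):
    return [m for m in MOVES if m <= stones]


def policy_prior(stones):
    """Stand-in for a policy network: uniform prior over legal moves."""
    moves = legal_moves(stones)
    return {m: 1.0 / len(moves) for m in moves}


def value_estimate(stones):
    """Stand-in for a value network: result for the player to move.
    In this toy game a pile divisible by 4 is lost for the player to move."""
    return -1.0 if stones % 4 == 0 else 1.0


class Node:
    def __init__(self, stones, prior):
        self.stones = stones
        self.prior = prior
        self.visits = 0
        self.value_sum = 0.0  # from the view of the player to move here
        self.children = {}

    def mean_value(self):
        return self.value_sum / self.visits if self.visits else 0.0


def select_child(node, c_puct=1.5):
    """Pick the child balancing estimated value against prior-weighted exploration."""
    def score(child):
        explore = c_puct * child.prior * math.sqrt(node.visits) / (1 + child.visits)
        # A child's value is from the opponent's view, so negate it.
        return -child.mean_value() + explore
    return max(node.children.values(), key=score)


def search(stones, simulations=200):
    root = Node(stones, prior=1.0)
    for _ in range(simulations):
        node, path = root, [root]
        while node.children:                      # selection
            node = select_child(node)
            path.append(node)
        if node.stones > 0:                       # expansion with policy priors
            for move, p in policy_prior(node.stones).items():
                node.children[move] = Node(node.stones - move, p)
        value = value_estimate(node.stones)       # evaluation instead of playing on
        for n in reversed(path):                  # backup, flipping sides each ply
            n.visits += 1
            n.value_sum += value
            value = -value
    # Play the most-visited move at the root.
    return max(root.children, key=lambda m: root.children[m].visits)


print(search(10))  # best move from a pile of 10 stones
```

From a pile of 10 the search settles on taking 2 stones, leaving the opponent a multiple of 4. The prior decides which branches get explored first, while the value estimate stands in for playing each line out to the end.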

“So, basically, AlphaGo is a system that learned from itself and then we used this to write an algorithm. So, in fact, this is close to how humans learn and play Go,” said Hassabis.

Match details

The five games between Lee Se-dol and AlphaGo will be held at the Four Seasons Hotel in Seoul, starting at 1 p.m. KST on March 9, 10, 12, 13 and 15.

The games will be played under Chinese rules, with a komi (compensation points for the player who goes second) of 7.5. Each player will receive two hours per game and three periods of 60-second byoyomi (countdown periods that begin once the allotted time has run out).

The games will be streamed live on DeepMind’s YouTube channel as well as Korea’s Baduk TV. They will also be featured on Go TV broadcasts in China and Japan. English and Korean commentary will be available, with Michael Redmond, a Western professional Go player, commentating in English.
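For readers unfamiliar with byoyomi, the time control can be sketched as a small clock simulation. It assumes the usual convention, which the match rules above do not spell out: once main time is gone, a move made within 60 seconds costs nothing, each full 60 seconds overrun burns one period, and a player with no periods left loses on time. The function name `play_clock` and its parameters are hypothetical.

```python
def play_clock(move_times, main_time=7200, periods=3, period_len=60):
    """Return the 0-based index of the move on which time runs out, or None.

    move_times: seconds one player spends on each of their moves.
    """
    for i, t in enumerate(move_times):
        if main_time > 0:
            if t <= main_time:
                main_time -= t        # still inside the two hours
                continue
            t -= main_time            # the remainder spills into byoyomi
            main_time = 0
        # In byoyomi: a move within one period resets the countdown.
        while t > period_len:
            periods -= 1              # one period used up
            t -= period_len
            if periods == 0:
                return i              # loss on time
    return None


# Uses exactly two hours, then keeps every move under 60 seconds.
print(play_clock([7000, 150, 50, 59]))
# Overruns 60 seconds three times after main time is gone.
print(play_clock([7200, 59, 61, 61, 61]))
```

The first player never loses a period, while the second runs out of periods on the fifth move.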